Leveraging JSON in PostgreSQL 17

PostgreSQL 17 introduces a game-changing feature for JSON handling - full support for the SQL/JSON standard. If you're working with JSON data in your database, this is a major upgrade that simplifies complex operations and brings PostgreSQL's JSON capabilities to a new level. Let's dive into what makes this so exciting for developers and database engineers.

What's new with JSON in PostgreSQL 17?

While PostgreSQL has supported JSON data types since version 9.2, PostgreSQL 17 brings something truly special - comprehensive implementation of the SQL/JSON standard. This isn't just another PostgreSQL-specific feature; it's an industry standard implementation that may eventually work across multiple database systems.

The key advantage here is standardization. These new functions and operators follow established SQL/JSON patterns, making your code more portable and future-proof. Even if you're already comfortable with PostgreSQL's existing JSON functions, the new standard functions offer improved capabilities and cleaner syntax.

JSON constructors: Building JSON values

Let's start with the basics. PostgreSQL 17 introduces several new constructors for creating JSON values:

JSON constructor

The simplest constructor, JSON(), takes content and turns it into a JSON value:

SELECT JSON('12345'::text);

-- 12345

SELECT JSON('"my_text"');

-- "my_text"One particularly useful feature is validation for duplicate keys:

SELECT JSON('{"mykey": 111, "mykey": 222}' WITH UNIQUE KEYS);

-- Error: duplicate JSON object key valueJSON_SCALAR constructor

When you need proper JSON scalar values (with appropriate quoting), use JSON_SCALAR:

SELECT JSON_SCALAR(1); -- 1 (unquoted)

SELECT JSON_SCALAR(-12.34); -- -12.34 (unquoted)

SELECT JSON_SCALAR(true); -- true (unquoted)

SELECT JSON_SCALAR('my_text'); -- "my_text" (quoted)

SELECT JSON_SCALAR(now()); -- "2025-04-17T18:49:43.436927+00:00" (quoted)JSON_ARRAY constructor

Need to create JSON arrays? The JSON_ARRAY constructor offers two modes of operation:

-- List of values mode

SELECT JSON_ARRAY('my_text', true, now());

-- ["my_text", true, "2025-04-17T18:50:02.873228+00:00"]

-- Query-based mode

SELECT JSON_ARRAY(SELECT datname FROM pg_database ORDER BY datname);

-- ["postgres", "template0", "template1"]You can control how NULL values are handled:

-- Default (ABSENT ON NULL) skips nulls

SELECT JSON_ARRAY('my_text', NULL, 'test');

-- ["my_text", "test"]

-- NULL ON NULL includes nulls

SELECT JSON_ARRAY('my_text', NULL, 'test' NULL ON NULL);

-- ["my_text", null, "test"]JSON_ARRAYAGG aggregator

To build JSON arrays from query results, PostgreSQL 17 provides JSON_ARRAYAGG:

SELECT JSON_ARRAYAGG(datname ORDER BY length(datname) DESC)

FROM pg_database;

-- ["template1", "template0", "postgres"]Like JSON_ARRAY, you can control NULL handling with NULL ON NULL and specify the return type with RETURNING jsonb.

JSON_OBJECT constructor

The JSON_OBJECT constructor creates JSON objects with a clean, readable syntax:

SELECT JSON_OBJECT(

'a' VALUE 123,

'b' : true,

'c' VALUE now(),

'd' : JSON_ARRAY(100, 20.0, 'my_text')

);

-- {"myarr" : 123, "b" : true, "c" : "2025-04-17T18:51:18.211981+00:00", "d" : [100, 20.0, "my_text"]}Notice that you can use either VALUE or : to separate keys from values - both styles work.

JSON_OBJECTAGG aggregator

Similar to JSON_ARRAYAGG, the JSON_OBJECTAGG function aggregates rows into a JSON object:

SELECT JSON_OBJECTAGG(datname VALUE oid RETURNING jsonb)

FROM pg_database;

-- {"abcd": "16388", "postgres": "5", "template0": "4", "template1": "1"}Testing JSON values

PostgreSQL 17 introduces expressions for testing if a value is proper JSON:

SELECT 'my_text' IS JSON VALUE, -- false

JSON_SCALAR(now()) IS JSON SCALAR, -- true

JSON_SCALAR(now()) IS JSON ARRAY, -- false

JSON_ARRAY(1, 2, 3) IS JSON ARRAY, -- true

JSON_OBJECT('key': 'value') IS JSON OBJECT;

-- true

-- ?column? | ?column? | ?column? | ?column? | ?column?

-- ---------+----------+----------+----------+----------

-- f | t | f | t | tYou can also check for unique keys throughout the entire structure:

SELECT JSON_ARRAY(1, 2, JSON_OBJECT('mykey': 1, 'mykey': 2)) IS JSON ARRAY WITH UNIQUE KEYS;

-- will return false

Query functions: Extracting and manipulating JSON

Now we're getting to the powerful part - extracting data from JSON structures.

JSON_EXISTS function

Use JSON_EXISTS to check if a JSON path expression matches any value:

SELECT JSON_EXISTS('{"key": "value", "myarr": [1, 2, 5]}', '$.key'); -- true

SELECT JSON_EXISTS('{"key": "value", "myarr": [1, 2, 5]}', '$.smth'); -- false

SELECT JSON_EXISTS('{"key": "value", "myarr": [1, 2, 5]}', '$.a[5]'); -- falseYou can control error handling with TRUE ON ERROR, FALSE ON ERROR, or UNKNOWN ON ERROR.

JSON_QUERY function

JSON_QUERY extracts portions of JSON based on path expressions:

SELECT JSON_QUERY('{"key": "value", "myarr": [1, 2, 5]}', '$.key');

-- "value"You can handle empty results with ON EMPTY clauses:

SELECT JSON_QUERY(

'{"key": "value", "myarr": [1, 2, 5]}',

'$.a[*] ? (@ > 50)'

WITH ARRAY WRAPPER

DEFAULT '["notfound", "found"]' ON EMPTY

);

-- ["notfound", "found"]The star of the show: JSON_TABLE

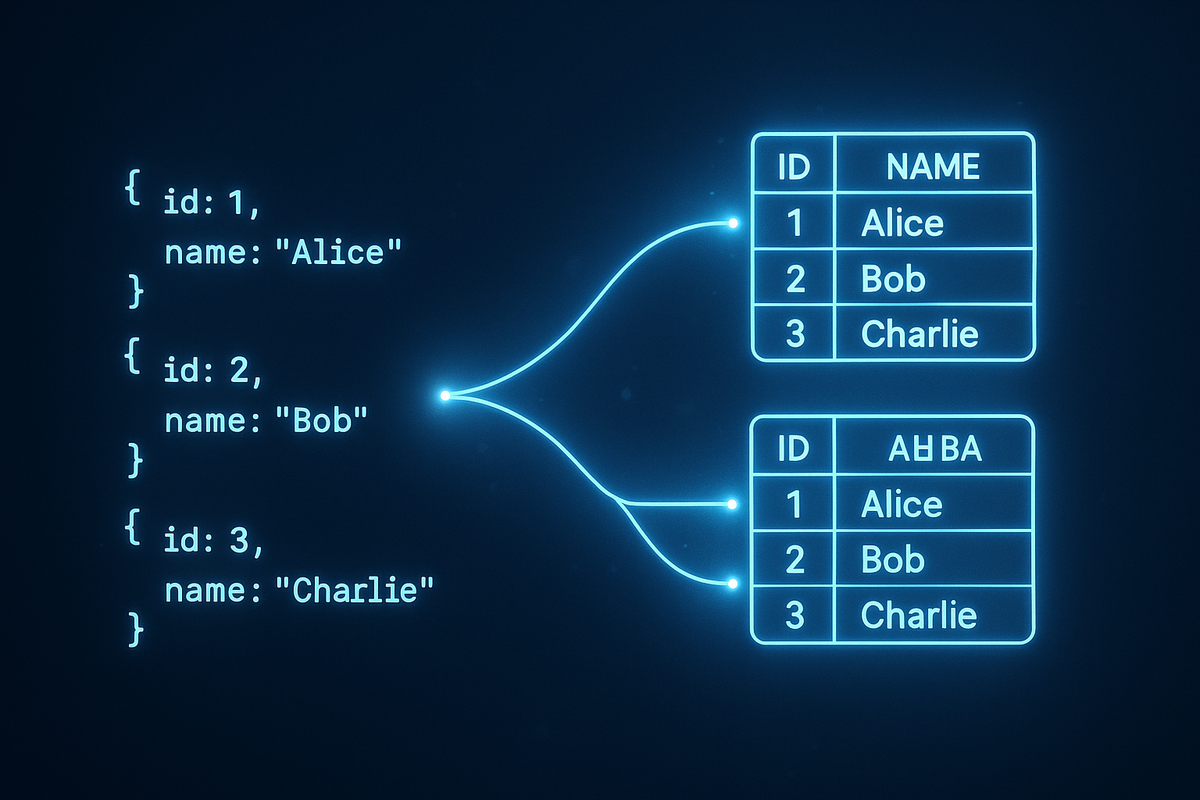

The most powerful new feature is JSON_TABLE, which converts JSON data into relational rows and columns - perfect for bridging document and relational data models.

Basic JSON_TABLE usage

Let's see a simple example:

SELECT *

FROM JSON_TABLE(

'[{"myarr":1,"nextkey":2},{"myarr":3,"nextkey":4}]',

'$[*]'

COLUMNS (

column_a int4 PATH '$.myarr',

column_b int4 PATH '$.nextkey'

)

);Result:

column_a | column_b

----------+----------

1 | 2

3 | 4

You can add row numbers with FOR ORDINALITY and use shortened column definitions:

SELECT *

FROM JSON_TABLE(

'[{"myarr":10,"nextkey":20},{"myarr":30,"nextkey":40}]',

'$[*]'

COLUMNS (

id FOR ORDINALITY,

column_a int4 PATH '$.myarr',

column_b int4 path '$.nextkey',

myarr int4, -- Implicit path '$.myarr'

nextkey int4 -- Implicit path '$.nextkey'

)

);Result:

id | column_a | column_b | myarr | nextkey

----+----------+----------+-------+---------

1 | 10 | 20 | 10 | 20

2 | 30 | 40 | 30 | 40

Working with nested structures

For nested JSON structures, use the NESTED PATH feature:

SELECT schools.*

FROM JSON_TABLE(

'[

{

"teacher_name": "John Smith",

"payment_method": "Umbrella Company",

"user_id": 101,

"feedback": [

{"author": "School A", "rating": 4, "comment": "Excellent teaching skills"},

{"author": "School B", "rating": 5, "comment": "Great collaboration"}

]

},

{

"teacher_name": "Anna Johnson",

"payment_method": "Direct Payment",

"user_id": 102,

"feedback": [

{"author": "School C", "rating": 3, "comment": "Good class organization"},

{"author": "School D", "rating": 4, "comment": "Recommended for future work"},

{"author": "School A", "rating": 5, "comment": "Best teacher this year"}

]

}

]',

'$[*]'

COLUMNS (

id FOR ORDINALITY,

teacher_name text,

payment_method text,

user_id int,

NESTED PATH '$.feedback[*]'

COLUMNS (

school text PATH '$.author',

rating int PATH '$.rating',

comment_text text PATH '$.comment'

)

)

) AS schools;Result:

id | teacher_name | payment_method | user_id | school | rating | comment_text

----+--------------+------------------+---------+----------+--------+-----------------------------

1 | John Smith | Umbrella Company | 101 | School A | 4 | Excellent teaching skills

1 | John Smith | Umbrella Company | 101 | School B | 5 | Great collaboration

2 | Anna Johnson | Direct Payment | 102 | School C | 3 | Good class organization

2 | Anna Johnson | Direct Payment | 102 | School D | 4 | Recommended for future work

2 | Anna Johnson | Direct Payment | 102 | School A | 5 | Best teacher this year

Real-world example: Processing IOT data

Let's see a practical example using some IOT data:

WITH iot_data AS (SELECT '{

"building": "Headquarters",

"timestamp": "2025-11-15T10:00:00Z",

"sensors": [

{

"device_id": "temp-001",

"type": "temperature",

"location": "Floor 1, Room 101",

"readings": [

{"time": "2025-11-15T10:00:00Z", "value": 22.5, "unit": "C"},

{"time": "2025-11-15T10:05:00Z", "value": 22.7, "unit": "C"},

{"time": "2025-11-15T10:10:00Z", "value": 23.0, "unit": "C"}

]

},

{

"device_id": "hum-002",

"type": "humidity",

"location": "Floor 1, Room 101",

"readings": [

{"time": "2025-11-15T10:00:00Z", "value": 45, "unit": "%"},

{"time": "2025-11-15T10:05:00Z", "value": 46, "unit": "%"},

{"time": "2025-11-15T10:10:00Z", "value": 47, "unit": "%"}

]

},

{

"device_id": "co2-003",

"type": "co2",

"location": "Floor 2, Room 201",

"readings": [

{"time": "2025-11-15T10:00:00Z", "value": 420, "unit": "ppm"},

{"time": "2025-11-15T10:05:00Z", "value": 430, "unit": "ppm"},

{"time": "2025-11-15T10:10:00Z", "value": 450, "unit": "ppm"}

]

}

]

}'::jsonb AS data),

sensors AS (SELECT 'Headquarters' AS building,

jt.*

FROM iot_data,

JSON_TABLE(data, '$.sensors[*]' COLUMNS (

device_id text PATH '$.device_id',

sensor_type text PATH '$.type',

location text PATH '$.location',

NESTED PATH '$.readings[*]'

COLUMNS (

reading_time timestamp PATH '$.time',

reading_value float PATH '$.value',

reading_unit text PATH '$.unit'

)

)) AS jt)

SELECT building,

device_id,

sensor_type,

location,

reading_time,

reading_value,

reading_unit,

-- Calculate some additional metrics

CASE

WHEN sensor_type = 'temperature' AND reading_value > 23 THEN 'High'

WHEN sensor_type = 'temperature' AND reading_value < 20 THEN 'Low'

WHEN sensor_type = 'temperature' THEN 'Normal'

WHEN sensor_type = 'humidity' AND reading_value > 60 THEN 'High'

WHEN sensor_type = 'humidity' AND reading_value < 30 THEN 'Low'

WHEN sensor_type = 'humidity' THEN 'Normal'

WHEN sensor_type = 'co2' AND reading_value > 800 THEN 'High'

WHEN sensor_type = 'co2' AND reading_value < 400 THEN 'Low'

WHEN sensor_type = 'co2' THEN 'Normal'

END AS status,

-- Convert timestamp to local timezone

reading_time AT TIME ZONE 'UTC' AS local_time

FROM sensors

ORDER BY device_id, reading_time;

-- building | device_id | sensor_type | location | reading_time | reading_value | reading_unit | status | local_time

-- -------------+-----------+-------------+-------------------+---------------------+---------------+--------------+--------+------------------------

-- Headquarters | co2-003 | co2 | Floor 2, Room 201 | 2025-11-15 10:00:00 | 420 | ppm | Normal | 2025-11-15 10:00:00+00

-- Headquarters | co2-003 | co2 | Floor 2, Room 201 | 2025-11-15 10:05:00 | 430 | ppm | Normal | 2025-11-15 10:05:00+00

-- Headquarters | co2-003 | co2 | Floor 2, Room 201 | 2025-11-15 10:10:00 | 450 | ppm | Normal | 2025-11-15 10:10:00+00

-- Headquarters | hum-002 | humidity | Floor 1, Room 101 | 2025-11-15 10:00:00 | 45 | % | Normal | 2025-11-15 10:00:00+00

-- Headquarters | hum-002 | humidity | Floor 1, Room 101 | 2025-11-15 10:05:00 | 46 | % | Normal | 2025-11-15 10:05:00+00

-- Headquarters | hum-002 | humidity | Floor 1, Room 101 | 2025-11-15 10:10:00 | 47 | % | Normal | 2025-11-15 10:10:00+00

-- Headquarters | temp-001 | temperature | Floor 1, Room 101 | 2025-11-15 10:00:00 | 22.5 | C | Normal | 2025-11-15 10:00:00+00

-- Headquarters | temp-001 | temperature | Floor 1, Room 101 | 2025-11-15 10:05:00 | 22.7 | C | Normal | 2025-11-15 10:05:00+00

-- Headquarters | temp-001 | temperature | Floor 1, Room 101 | 2025-11-15 10:10:00 | 23 | C | Normal | 2025-11-15 10:10:00+00

That query is:

- Using

JSON_TABLEto extract sensor data from a JSON structure - Calculating additional metrics based on sensor readings

- Converting timestamps to local time zones

- Sorting the results by device ID and reading time

Summary

PostgreSQL 17's SQL/JSON standard implementation is a powerful addition that bridges the gap between document-oriented and relational data models. The new constructors make it easier to build JSON values, while the query functions and especially JSON_TABLE provide sophisticated ways to extract and transform JSON data.

Whether you're working with APIs that return JSON, storing document data in your database, or just need to occasionally work with structured data, these new functions make your life easier and your code cleaner. The SQL/JSON standard also ensures better compatibility with other database systems that implement the same standard.

Most importantly, JSON_TABLE transforms complex nested JSON structures into relational tables that you can join, filter, and analyze using familiar SQL tools. This brings together the flexibility of JSON with the analytical power of SQL - truly the best of both worlds.

Even more you have in the documentation - here is a brief only.

Happy JSON-ing!