Microservices are not just technology - how not to build a distributed monolith?

Perhaps you have already gone through this implementation. The microservice architecture was a great success. Adding a simple change requires modifying only thirty services, but unfortunately, you don't have enough RAM on your aluminium laptop to run all the containers... So we go back to the good old days, where once every six months there is an API compatibility conjunction and we can finally release. The only difference is that now we need to hire a full-time release manager. If this scenario sounds familiar, it means that instead of autonomous services, you have built a distributed monolith. And you are not alone in this.

The cardinal sins of setting boundaries

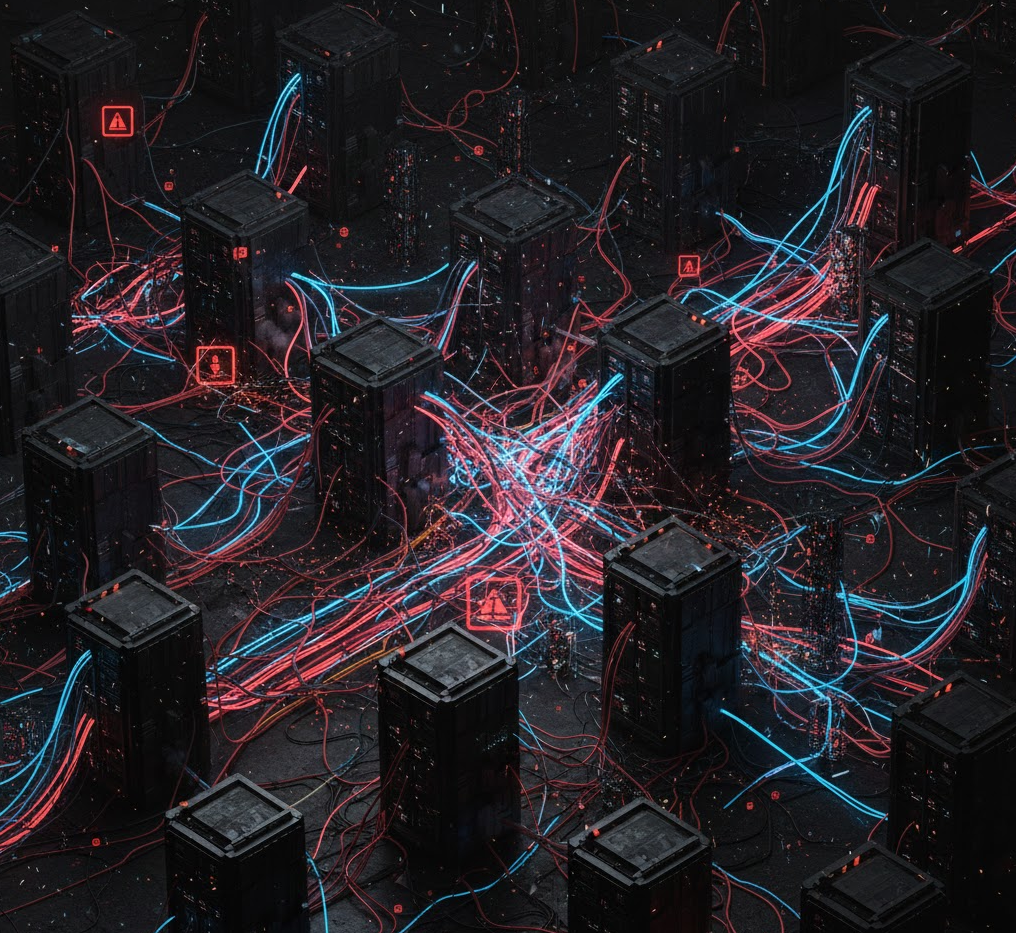

Most problems with microservices begin at the stage of drawing rectangles on the board. Usually, when an analyst and an architect sit down to work on a project, they draw a beautiful static diagram. We have boxes: "Customer", "Product", "Order". Everything looks clean and logical. We happily implement each rectangle, dealing with CRUD operations. Problems begin when the system comes to life. The static diagram lies because it hides the complexity of communication. When we apply actual network traffic to this drawing, it turns out that everyone is "copulating" with everyone else.

This phenomenon results from an approach based on nouns rather than processes. We divide the system into vertical segments corresponding to tables in the database, and then we are surprised that the implementation of a business process requires touching each of these segments. This is the classic "Shotgun Surgery" anti-pattern: one business change means that we have to rework the code of many modules at the same time, which drastically increases the risk of error and the cost of team coordination. If your microservices envy each other's data (Data Envy) and call each other via HTTP with every user query, then you have actually built one big system, only with very slow method calls over the network.

Single source of truth instead of dispersion

To fix this, we need to reverse the thought process. Instead of starting with data, let's start with what changes together. The key is to identify a Single Source of Truth (SSOT) for key business questions. Imagine an e-commerce system where the price of a product (or its score) depends on many factors: number of views, number of returns, complaints, and stock levels. In a naive approach, the Pricing module would query the warehouse, returns module, and analytics module synchronously with each calculation. This creates a network of dependencies where the failure of one element brings down the entire pricing process (Single Point of Failure).

A better approach is to reverse the dependencies. The Pricing module should listen for events from other systems and build its own projection of the data needed to answer the question ‘what is the price?’. This makes it the sole source of truth in this regard, and the calculation logic is contained in one place.

Let's look at an example in Ruby to see how it looks in a naive (bad) version and how it looks in an event-driven approach.

```ruby
# BAD ARCHITECTURE: The Coupling Hell
# In this scenario, the PricingService needs to query multiple external services
# synchronously. If 'returns_service' is down, price calculation fails.
require "faraday" # provides Faraday::ConnectionFailed, raised by the HTTP clients below

class PricingService
  def calculate_price(product_id)
    base_price = ProductService.get_price(product_id)

    # Direct dependency on other services
    return_rate = ReturnsService.get_return_rate(product_id)
    view_count = AnalyticsService.get_views(product_id)

    # Business logic scattered across network calls
    if return_rate > 0.1
      base_price * 0.9
    elsif view_count > 1000
      base_price * 1.1
    else
      base_price
    end
  rescue Faraday::ConnectionFailed => e
    # Now we have to handle partial failures... nightmare.
    raise "Cannot calculate price, dependency down: #{e.message}"
  end
end
```

And now the reactive approach, where we build a local state based on facts that have occurred in the system.

```ruby
# GOOD ARCHITECTURE: Event Driven & Autonomous
# This service subscribes to events and updates its own local projection (SSOT).
# It doesn't care if other services are online when queried.
class PricingProjection
  # This implies we have some persistent storage like Redis or Postgres
  def initialize(storage)
    @storage = storage
  end

  def handle_event(event)
    case event.type
    when "ProductViewed"
      # Just increment a counter, very fast
      @storage.increment("views:#{event.product_id}")
    when "ReturnRegistered"
      # Update return stats
      @storage.update_return_rate("returns:#{event.product_id}", event.data)
    end

    # Recalculate and store the new price immediately or asynchronously
    recalculate_price(event.product_id)
  end

  def get_price(product_id)
    # Zero network latency dependencies here.
    # We just return the pre-calculated value.
    @storage.get("price:#{product_id}")
  end

  private

  def recalculate_price(product_id)
    # Complex logic is encapsulated here and uses only local data
  end
end
```

Leakage of abstraction in communication

Simply using a message queue does not make the system loosely coupled if we design the messages poorly. A very common mistake is sending technical events instead of business events. If you send a UserAddedToAuctionTable event, you force all recipients to understand the structure of your database. What does it mean that a user has been added to the table? Are they bidding? Are they winning? The recipient has to deduce this, which leads to duplication of business logic in many places.

If you change the table structure, you will "blow up" all listening services. This is context leakage. Instead, we should emit events that describe business facts, such as AuctionBidPlaced or AuctionWon. Then the sender can freely change their implementation as long as they emit the same business fact.
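
To make the contrast concrete, here is a minimal sketch in plain Ruby. The event fields and the `WinnerNotifier` consumer are hypothetical names chosen for illustration; only the event names `AuctionBidPlaced` and `AuctionWon` come from the text above.

```ruby
# Hypothetical business events: each one states a fact in domain language
# and carries no hint of how the auction service stores its data.
AuctionBidPlaced = Struct.new(:auction_id, :bidder_id, :amount_cents, keyword_init: true)
AuctionWon       = Struct.new(:auction_id, :winner_id, :final_amount_cents, keyword_init: true)

# A hypothetical consumer. It reacts to facts and never has to guess
# what a row in somebody else's table means.
class WinnerNotifier
  attr_reader :outbox

  def initialize
    @outbox = []
  end

  def handle(event)
    case event
    when AuctionWon
      @outbox << "User #{event.winner_id} won auction #{event.auction_id}"
    when AuctionBidPlaced
      # Not relevant for this consumer, so it is simply ignored.
    end
  end
end

notifier = WinnerNotifier.new
notifier.handle(AuctionBidPlaced.new(auction_id: 7, bidder_id: 42, amount_cents: 1_500))
notifier.handle(AuctionWon.new(auction_id: 7, winner_id: 42, final_amount_cents: 1_500))
puts notifier.outbox
```

The sender could move bids to a different table or a different database tomorrow, and this consumer would not notice, as long as the same facts keep arriving.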

Also, avoid the temptation to send both the "old" and "new" field values in an event, hoping that the recipient will figure it out. This forces other teams to implement logic for interpreting changes (‘Did the price increase or decrease?’), which should be the task of the source domain.
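
One way to keep that interpretation in the source domain is sketched below. The `Product` class and the `PriceIncreased` / `PriceDecreased` event names are assumptions made for this example, not part of any particular framework.

```ruby
# Hypothetical events: the direction of the change is decided by the owner of the price.
PriceIncreased = Struct.new(:product_id, :new_price_cents, keyword_init: true)
PriceDecreased = Struct.new(:product_id, :new_price_cents, keyword_init: true)

class Product
  attr_reader :id, :price_cents

  def initialize(id:, price_cents:)
    @id = id
    @price_cents = price_cents
  end

  # Returns the business event describing what happened, or nil if nothing changed.
  def change_price(new_price_cents)
    return nil if new_price_cents == @price_cents

    event_class = new_price_cents > @price_cents ? PriceIncreased : PriceDecreased
    @price_cents = new_price_cents
    event_class.new(product_id: @id, new_price_cents: new_price_cents)
  end
end

product = Product.new(id: 1, price_cents: 10_000)
event = product.change_price(9_000)
puts event.class
```

Consumers subscribe to the fact they care about and never compare two field values themselves.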

How to design autonomous modules?

So, how can we create effective services? The following three phases will help you do so.

Phase I: Business Analysis and Boundary Setting

The goal of this phase is to define what we do to achieve autonomy at the business level.

- Understanding Business Processes (Event Storming):
  - Start by visualising and understanding key business processes.
  - Focus on identifying significant state changes in the system that are actual business events (e.g., ProductWasReserved) rather than CRUD operations (create, read, update, delete).
  - Avoid: Dividing the system according to the database schema (Data-Driven approach).
- Identifying Bounded Contexts:
  - Group events and logic that change together or are inextricably linked in business terms.
  - These groups will define bounded contexts.
  - Avoid: The Shotgun Surgery anti-pattern, in which a single business change requires modifying multiple modules.
- Defining a Single Source of Truth (SSOT):
  - For each bounded context, define what key business question it must be able to answer (e.g., ‘What is the customer's current creditworthiness?’).
  - This module becomes the single source of truth for this information, encapsulating the logic and data necessary to answer the question.
  - Avoid: The anti-patterns of Feature Envy and Data Envy, where modules envy each other's data or logic.
- Verifying Dependencies (Minimising SPOF):
  - Analyse how modules communicate with each other (dynamic diagram).
  - Aim to minimise synchronous dependencies and ensure that the failure of one element does not cause a cascading failure of the entire system (minimising Single Points of Failure).
  - Avoid: Microservice Orgies, where everyone calls everyone else, leading to a distributed monolith architecture.
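
The dependency check in the last step can be approximated with a few lines of code. The sketch below assumes you can write down the synchronous calls from your dynamic diagram as a hash; the module names, the `SYNC_CALLS` constant, and the `sync_dependencies` helper are invented for illustration.

```ruby
require "set"

# Hypothetical dynamic diagram: each module maps to the modules it calls synchronously.
SYNC_CALLS = {
  "pricing"   => ["products", "returns", "analytics"],
  "returns"   => ["orders"],
  "orders"    => ["products"],
  "products"  => [],
  "analytics" => []
}.freeze

# Returns every module whose outage can break `start`, directly or transitively.
def sync_dependencies(graph, start)
  seen = Set.new
  stack = graph.fetch(start, []).dup
  until stack.empty?
    current = stack.pop
    next if seen.include?(current)

    seen << current
    stack.concat(graph.fetch(current, []))
  end
  seen
end

SYNC_CALLS.each_key do |name|
  deps = sync_dependencies(SYNC_CALLS, name)
  puts "#{name}: fails if any of #{deps.to_a.sort.inspect} is down"
end
```

A module with a long list here is a candidate for replacing synchronous calls with a local projection. If a module shows up in its own list, the diagram contains a synchronous cycle.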

Phase II: Communication Design (Boundary Tightness)

This phase focuses on how communication should proceed in order to maintain autonomy.

- Choosing Asynchronous Communication (EDA):
  - Replace synchronous calls (e.g., REST/RPC) with asynchronous transmission of facts (events) via a message broker.
  - Avoid: Synchronous communication (HTTP/RPC) between contexts unless absolutely necessary.
- Defining "Thick" Business Events:
  - Events must describe a business fact (what happened in the sender's domain? e.g., UserBidPriceX).
  - Events should be abstract and understandable to the business.
  - Avoid: Sending ‘thin’ or technical events that describe low-level changes in the database (e.g., FieldValuePriceChanged) - this is context leakage.
- Designing Consumers and Local Projections:
  - Event recipients should respond to facts by building and maintaining their own local projections of the data (local state) needed for their work.
  - This allows the consumer module to operate autonomously (in accordance with Eventual Consistency), even if the sender is temporarily unavailable.
  - Avoid: Provoking an SPOF Avalanche by forcing consumers to query the sender synchronously for details after receiving an event.
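
The consumer side of this phase might look like the sketch below. It uses a tiny in-memory stand-in for a message broker, so `InMemoryBroker`, `StockProjection`, and the payload fields are assumptions for illustration; `ProductWasReserved` is the event name used earlier in the text. A real broker would add persistence, retries, and ordering guarantees that this sketch ignores.

```ruby
# Hypothetical in-memory stand-in for a message broker.
class InMemoryBroker
  def initialize
    @subscribers = Hash.new { |hash, key| hash[key] = [] }
  end

  def subscribe(event_name, &handler)
    @subscribers[event_name] << handler
  end

  def publish(event_name, payload)
    @subscribers[event_name].each { |handler| handler.call(payload) }
  end
end

# The consumer keeps its own copy of the data it needs (a local projection).
class StockProjection
  def initialize(broker)
    @reserved = Hash.new(0)
    broker.subscribe("ProductWasReserved") { |payload| apply_reservation(payload) }
  end

  # Answered from local state only; no call back to the sender.
  def reserved_quantity(product_id)
    @reserved[product_id]
  end

  private

  def apply_reservation(payload)
    # The "thick" event carries everything the consumer needs.
    @reserved[payload.fetch(:product_id)] += payload.fetch(:quantity)
  end
end

broker = InMemoryBroker.new
projection = StockProjection.new(broker)

broker.publish("ProductWasReserved", { product_id: "sku-1", quantity: 2 })
broker.publish("ProductWasReserved", { product_id: "sku-1", quantity: 3 })

puts projection.reserved_quantity("sku-1") # prints 5
```

Once the events have been applied, `reserved_quantity` keeps answering even if the service that published them is offline.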

Phase III: Implementation and Deployment Decision (Architectural Drivers)

This is when we decide whether the logical module will become a microservice (deployment unit).

- Verification of State Change and Scaling:
  - Question: Does the module change state and require a specific SLA (e.g., high availability) or is it computationally expensive?
  - Decision: If the answer is YES (you need independent scaling of this part of the logic) -> Microservice (separate deployment).
- Organisational Requirements Verification:
  - Question: Does the autonomous team need to be able to deploy this module independently, without coordination and regression testing with other teams?
  - Decision: If the answer is YES -> Microservice (justified by team scaling).
- Statelessness Verification (Pure Transform):
  - Question: Is the module a pure, stateless transform of input to output (e.g., validation logic, utils)?
  - Decision: If the answer is YES, consider using a Library Component in a monolith or a Serverless Function (if justified by the cost of network communication compared to the cost of computation).
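
The three checks can be written down as a small decision function. This is only a sketch of the checklist above: the `ModuleTraits` struct, its field names, and the returned symbols are hypothetical, and falling back to a module inside a monolith when no question is answered with YES is an assumption of this example.

```ruby
# Hypothetical description of a logical module, one flag per question in Phase III.
ModuleTraits = Struct.new(
  :stateful_with_strict_sla,  # changes state and needs a specific SLA
  :computationally_expensive, # needs independent scaling
  :independent_team_deploys,  # an autonomous team must deploy it on its own
  :pure_transform,            # stateless input -> output
  keyword_init: true
)

# Applies the checks in the order they are listed above; the first YES wins.
def deployment_recommendation(traits)
  return :microservice if traits.stateful_with_strict_sla || traits.computationally_expensive
  return :microservice if traits.independent_team_deploys
  return :library_or_serverless_function if traits.pure_transform

  :module_in_monolith
end

validator = ModuleTraits.new(pure_transform: true)
puts deployment_recommendation(validator)

video_encoder = ModuleTraits.new(computationally_expensive: true)
puts deployment_recommendation(video_encoder)
```

Flags that are not set default to `nil`, so an empty `ModuleTraits.new` ends up as a module in the monolith.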

Are you certain you need a microservice?

Finally, it is worth asking yourself whether it makes sense to separate services. Microservices are primarily an organisational pattern used to scale people's work, not just code. If you have one team maintaining ten microservices, you are doing yourself a disservice.

Separating code into a separate service makes sense when you need independent scaling (because a given module consumes a lot of CPU), have specific SLA requirements, or want to separate technology. Otherwise, a well-modularised monolith (where modules communicate via API within the process, rather than over the network) will often be more efficient and easier to maintain. Remember that bad code in a monolith is just bad code. Bad code in microservices is a distributed system that cannot be debugged.

Happy SSOT-ing!