Rails Performance Optimisation - Understanding GVL in practice

In Ruby on Rails applications, where scalability and efficiency matter a great deal, understanding the nuances of performance is crucial. Often, when we talk about the “speed” of an application, we don't specify what that really means. In this article, we'll look at the Global VM Lock, latency, and throughput, and I'll try to give practical tips on how to measure and improve the performance of your Rails applications.

Global VM Lock (GVL) and its impact

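The Global VM Lock (GVL) is a mechanism in the reference Ruby interpreter (CRuby) that ensures only one thread can execute Ruby code at any given moment; it plays the same role as the GIL (Global Interpreter Lock) in Python. This means that even if your process runs multiple threads, they cannot execute Ruby code in parallel on multiple processor cores (setting Ractors aside). The lock is released while a thread waits on blocking I/O (for example, a database query, a network call, or sleep), so other threads can run Ruby code in the meantime.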

In practice, the GVL becomes a bottleneck in CPU-bound applications, i.e., those that spend most of their time on intensive calculations rather than waiting for input/output (I/O) operations. In such situations, adding more threads to a threaded server such as Puma can actually worsen performance rather than improve it: the threads compete for the GVL, which increases contention and makes each request wait longer for its turn.

For CPU-bound applications, multi-process servers (such as Unicorn, or Pitchfork, Shopify's open-source fork of Unicorn) have an advantage. Each process has its own Ruby interpreter instance and its own GVL, so Ruby code can use multiple processor cores at once. Processes do consume more memory than threads, but the copy-on-write mechanism of operating systems such as Linux lets forked processes share a large portion of their memory, which significantly reduces this disadvantage.
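To make the multi-process point concrete, here is a minimal sketch that uses only Ruby's core Process.fork and IO.pipe to run the same CPU-bound work in several child processes. The names busy_work and run_in_processes are hypothetical, and this is not how Unicorn or Pitchfork manage their workers internally; it only shows that separate processes, each with its own GVL, can run Ruby code at the same time. It assumes a platform with fork (Linux or macOS, not Windows).

```ruby
# Sketch: spread CPU-bound work across forked processes (requires fork).
# Each child process has its own interpreter and therefore its own GVL.
def busy_work
  sum = 0
  2_000_000.times { |i| sum += i % 7 }
  sum
end

def run_in_processes(count)
  readers = count.times.map do
    reader, writer = IO.pipe
    Process.fork do
      reader.close
      writer.write(Marshal.dump(busy_work)) # send the result back to the parent
      writer.close
      exit!(0) # skip at_exit handlers inherited from the parent
    end
    writer.close # the parent only reads from this pipe
    reader
  end
  results = readers.map do |reader|
    value = Marshal.load(reader.read) # read returns once the child closes its end
    reader.close
    value
  end
  Process.waitall # reap the finished child processes
  results
end

def elapsed
  start = Process.clock_gettime(Process::CLOCK_MONOTONIC)
  yield
  Process.clock_gettime(Process::CLOCK_MONOTONIC) - start
end

sequential = elapsed { 4.times { busy_work } }
parallel = elapsed { run_in_processes(4) }
puts "4 tasks, one process: #{sequential.round(2)} s"
puts "4 tasks, 4 processes: #{parallel.round(2)} s"
```

On a machine with at least four free cores, the four-process run should take roughly as long as a single task rather than four times as long; on fewer cores the gain shrinks accordingly.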

Code example: Simulating GVL contention

Want to see how GVL affects CPU-bound applications? Take a look at a simple example. Imagine a computationally intensive function that you intend to run in multiple threads.

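```ruby
# This method simulates a CPU-intensive task
def cpu_intensive_task
  sum = 0
  10_000_000.times { sum += rand }
  sum
end

# Measure time for a single thread running the task once
start_time = Process.clock_gettime(Process::CLOCK_MONOTONIC)
cpu_intensive_task
puts "Single thread time: #{Process.clock_gettime(Process::CLOCK_MONOTONIC) - start_time} seconds"

# Measure time for four threads, each running the task once (4x the work)
start_time = Process.clock_gettime(Process::CLOCK_MONOTONIC)
threads = 4.times.map { Thread.new { cpu_intensive_task } }
threads.each(&:join)
puts "Multi-thread time (with GVL): #{Process.clock_gettime(Process::CLOCK_MONOTONIC) - start_time} seconds"

# Sample output from one run:
# Single thread time: 0.271238 seconds
# Multi-thread time (with GVL): 1.036789 seconds
```

The four threads do four times as much work as the single-threaded run, and in this run they took nearly four times as long (about 1.04 s versus 0.27 s). In other words, threading gave no speedup at all, because only one thread could execute Ruby code at a time. With the extra scheduling overhead and GVL contention, the threaded version can even end up slightly slower than running the four tasks one after another. This is the GVL in action!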
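For contrast, here is a minimal sketch of the same experiment with an I/O-bound task. It assumes that sleep is a fair stand-in for waiting on a database or an HTTP call: in both cases the waiting thread releases the GVL, so the waits of several threads overlap. The helper names io_bound_task and elapsed are illustrative.

```ruby
# I/O-bound contrast: sleep stands in for waiting on a database or HTTP call.
# A thread blocked in sleep (or in real blocking I/O) releases the GVL.
def io_bound_task
  sleep(0.2)
end

def elapsed
  start = Process.clock_gettime(Process::CLOCK_MONOTONIC)
  yield
  Process.clock_gettime(Process::CLOCK_MONOTONIC) - start
end

sequential = elapsed { 4.times { io_bound_task } }
threaded = elapsed { 4.times.map { Thread.new { io_bound_task } }.each(&:join) }

puts "Sequential: #{sequential.round(2)} s"
puts "4 threads:  #{threaded.round(2)} s"
```

The sequential run needs at least four waits back to back, while the threaded run should finish in roughly the time of a single wait. This is why threads help I/O-bound Rails apps even though they don't help CPU-bound ones.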

Distinguishing between throughput and latency

When discussing performance, we often use the word “faster” without specifying what it really means. It is important to distinguish between these two concepts:

- Latency is the time it takes for the system to process a single request. Low latency means that the user gets a quick response to their individual request. This is important for the overall experience and interactivity.

- Throughput is the number of requests that the system can handle in a given time. High throughput is crucial when considering scalability and the system's ability to handle multiple concurrent users.

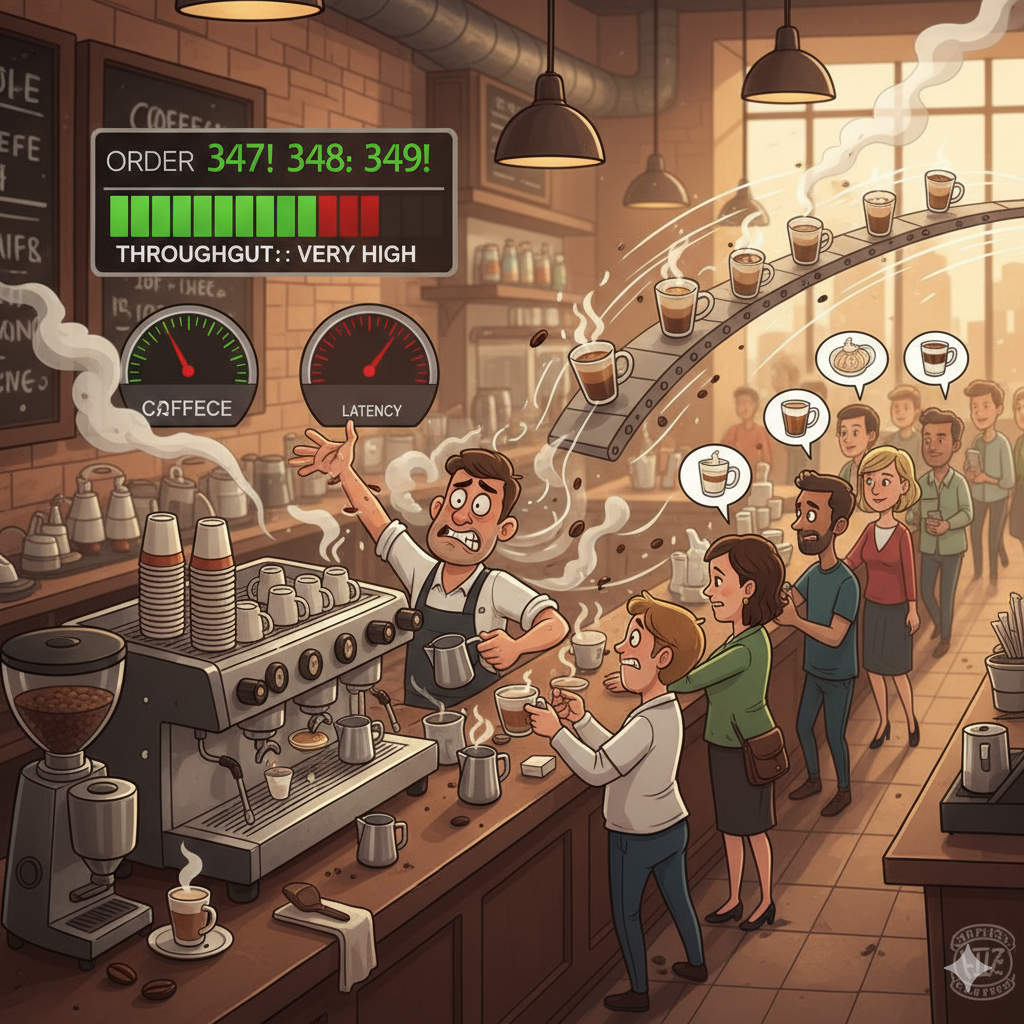

Imagine a coffee shop. Low latency means that the barista quickly prepares a single order, such as a coffee. High throughput, on the other hand, is a situation where the cafe can serve a lot of customers at once, even if each of them has to wait a little longer for their coffee, because there are several baristas working there, or just one, but an exceptionally efficient one.

The problem arises when we optimize for one and completely ignore the other. In the case of CPU-bound applications, attempting to increase throughput by adding multiple threads to the Puma server can paradoxically increase the latency of individual requests due to competition for GVL. Understanding whether our application is more limited by the processor or by I/O operations is essential to choosing the right optimization strategy and configuring the server properly.
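The difference between the two metrics is easy to see with a little arithmetic. The sketch below computes average latency and throughput from request start/finish timestamps; the Request struct, the summarize helper, and the two "coffee shops" are made-up illustrative data, not measurements.

```ruby
# Each request is recorded as start/finish timestamps in milliseconds.
Request = Struct.new(:started_ms, :finished_ms) do
  def latency_ms
    finished_ms - started_ms
  end
end

def summarize(requests)
  latencies = requests.map(&:latency_ms)
  # Throughput is measured over the whole window from first start to last finish.
  window_ms = requests.map(&:finished_ms).max - requests.map(&:started_ms).min
  {
    avg_latency_ms: latencies.sum.fdiv(latencies.size),
    throughput_rps: requests.size * 1000.0 / window_ms
  }
end

# Shop A serves four customers one after another, 100 ms each.
shop_a = [[0, 100], [100, 200], [200, 300], [300, 400]].map { |s, f| Request.new(s, f) }
# Shop B serves all four at once, but each order takes 200 ms.
shop_b = Array.new(4) { Request.new(0, 200) }

{ "A" => shop_a, "B" => shop_b }.each do |name, requests|
  stats = summarize(requests)
  puts "#{name}: avg latency #{stats[:avg_latency_ms]} ms, throughput #{stats[:throughput_rps]} req/s"
end
# A: avg latency 100.0 ms, throughput 10.0 req/s
# B: avg latency 200.0 ms, throughput 20.0 req/s
```

Shop B doubles throughput while doubling latency, which is exactly the trade-off to keep in mind when adding threads or processes.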

Key strategies for optimizing a Rails server

Here are a few strategies that I think are a good place to start.

Understanding application bottlenecks

Do you know what's most important? Measuring and analyzing where your application spends the most time. Is it waiting for the database? Or is it performing intensive calculations in Ruby? Profiling and monitoring tools are simply indispensable here.
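A quick first check, before reaching for a full profiler, is to compare wall-clock time with the CPU time the process actually used. The sketch below uses Process.clock_gettime with CLOCK_MONOTONIC and CLOCK_PROCESS_CPUTIME_ID; the cpu_share helper is hypothetical, and treating a share near 1.0 as "CPU-bound" and near 0 as "waiting" is a rough heuristic, not a rule. Note that the process CPU clock counts all threads, so inside a multi-threaded server CLOCK_THREAD_CPUTIME_ID is the better fit for a single request.

```ruby
# Hypothetical helper: what fraction of the elapsed wall-clock time was spent on CPU?
def cpu_share
  wall_start = Process.clock_gettime(Process::CLOCK_MONOTONIC)
  cpu_start = Process.clock_gettime(Process::CLOCK_PROCESS_CPUTIME_ID)
  yield
  wall = Process.clock_gettime(Process::CLOCK_MONOTONIC) - wall_start
  cpu = Process.clock_gettime(Process::CLOCK_PROCESS_CPUTIME_ID) - cpu_start
  cpu / wall
end

# sleep stands in for a slow database query or API call
waiting = cpu_share { sleep(0.3) }

computing = cpu_share do
  total = 0
  3_000_000.times { |i| total += i * i }
end

puts "sleep-heavy block:   #{(waiting * 100).round}% of wall time on CPU"
puts "compute-heavy block: #{(computing * 100).round}% of wall time on CPU"
```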

Choosing the right server

- For I/O-bound applications (i.e., those that make a lot of requests to databases or external APIs, or perform file operations), multi-threaded servers such as Puma can work great. While one thread waits for an I/O operation, it releases the GVL, so another thread can run Ruby code in the meantime.

- For CPU-bound applications (those that perform intensive calculations in Ruby), multi-process servers such as Unicorn or its fork Pitchfork are usually a better choice, because each process can run Ruby code on a separate processor core.
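With Puma, the split between threads and processes is set in config/puma.rb. The fragment below is an illustrative sketch using Puma's threads, workers, preload_app!, and port settings; the fallback values (3 threads, 2 workers) are placeholders to replace with numbers from your own measurements, not recommendations.

```ruby
# config/puma.rb (illustrative values; tune them from your own measurements)

# Threads per worker process. More threads help I/O-bound apps; for
# CPU-bound apps keep this low to limit GVL contention.
max_threads = Integer(ENV.fetch("RAILS_MAX_THREADS", 3))
min_threads = Integer(ENV.fetch("RAILS_MIN_THREADS", max_threads))
threads min_threads, max_threads

# Worker processes (clustered mode). Each worker has its own GVL, so this
# is what lets Puma run Ruby code on several CPU cores at once.
workers Integer(ENV.fetch("WEB_CONCURRENCY", 2))

# Load the app before forking so workers can share memory via copy-on-write.
preload_app!

port Integer(ENV.fetch("PORT", 3000))
```

Setting both thread values to 1 with several workers gives a Unicorn-like, one-request-per-process model while staying on Puma.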

Offloading to background tasks

Operations that tend to be slow, unreliable, or require communication with external services (such as sending emails, processing images, or integrating with third-party APIs) are best moved to background jobs. You can use tools such as Sidekiq, Resque, or Delayed Job for this. This keeps the HTTP request cycle fast and responsive.
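Sidekiq, Resque, and Delayed Job persist jobs outside the web process (in Redis or in the database) and run them in separate worker processes, but the core idea is simply a queue plus a worker. The toy sketch below shows that idea with Ruby's core Thread::Queue; TinyJobQueue is a hypothetical name, and because jobs live only in memory they are lost if the process dies, which is exactly why real job systems use external storage.

```ruby
# Hypothetical in-process job queue, only to illustrate offloading.
class TinyJobQueue
  def initialize
    @queue = Thread::Queue.new
    @worker = Thread.new do
      # pop blocks until a job arrives; it returns nil once the queue is closed and empty
      while (job = @queue.pop)
        begin
          job.call
        rescue StandardError => e
          warn "Job failed: #{e.message}" # keep the worker alive
        end
      end
    end
  end

  def enqueue(&job)
    @queue << job
  end

  # Stop accepting jobs and wait for the queued ones to finish.
  def shutdown
    @queue.close
    @worker.join
  end
end

delivered = []
jobs = TinyJobQueue.new

# The "request": enqueue the slow work and return immediately.
start = Process.clock_gettime(Process::CLOCK_MONOTONIC)
jobs.enqueue { sleep(0.3); delivered << "welcome email" }
response_ms = ((Process.clock_gettime(Process::CLOCK_MONOTONIC) - start) * 1000).round
puts "Responded after #{response_ms} ms"

jobs.shutdown
puts "Delivered: #{delivered.inspect}"
```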

GVL instrumentation

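Ruby 3.2 added a C-level API for instrumenting thread events (rb_internal_thread_add_event_hook), which makes it possible to measure how long threads wait for the GVL. Using it, directly or through a gem built on top of it, you can check whether GVL contention is really the source of your performance issues.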

Debugging and supporting gems

Many performance issues can be found in dependencies, i.e., gems. Feel free to open and examine gem code (bundle open <gem_name>), debug it, and, where possible, contribute fixes back to the open source community. Rails is not “magic”: its code is available and mostly understandable.

Practical measurement and application of optimization in Rails

Understanding the theory of GVL, throughput, and latency is one thing, but the real value comes when we can apply this knowledge in practice. Let me show you how to measure and implement optimizations in your Rails applications.

Measuring performance: where to start?

Before you even begin optimizing, you need to know what your bottleneck is. “Optimization without measurement is guesswork”, as programmers say, and it's hard to disagree with that.

APM - Application Performance Monitoring

This is an absolute must. Tools such as New Relic, Datadog APM, AppSignal, and Scout APM automatically instrument your Rails application, collecting a wealth of data about:

- request response time (latency), i.e., how long it takes to process a single HTTP request,

- throughput, i.e., how many requests per minute/second your application can handle,

- time spent in the database, i.e., how long database queries take,

- time spent in Ruby code, i.e., how much time processor calculations take,

- external API calls, i.e., how long queries to external services take,

- memory and CPU usage, i.e., general server resource metrics.

APMs are indispensable if you want to quickly find the slowest endpoints, database queries (watch out for N+1 queries!), and I/O operations.

Server logs

Your logs, especially those from production, contain valuable information about the duration of each request. You can use tools such as the ELK stack (Elasticsearch, Logstash, Kibana) or Grafana Loki to aggregate and analyze logs at scale.
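Even without a log platform, the default Rails request log already contains one "Completed <status> ... in <N>ms" line per request. The sketch below extracts those durations and computes nearest-rank percentiles; the sample lines are made up, and the regex and helper names are hypothetical. If you use lograge or JSON logging, the pattern will need adapting.

```ruby
# Made-up sample in the style of Rails' default request log.
sample_log = <<~LOG
  Started GET "/products" for 127.0.0.1 at 2024-01-01 12:00:00 +0000
  Completed 200 OK in 45ms (Views: 30.1ms | ActiveRecord: 5.2ms | Allocations: 1234)
  Completed 200 OK in 120ms (Views: 80.0ms | ActiveRecord: 25.3ms | Allocations: 5678)
  Completed 500 Internal Server Error in 980ms (ActiveRecord: 900.1ms | Allocations: 910)
  Completed 200 OK in 60ms (Views: 40.2ms | ActiveRecord: 10.0ms | Allocations: 2345)
LOG

# Captures the total request duration from a "Completed ..." line.
COMPLETED = /Completed \d{3} .*? in (\d+(?:\.\d+)?)ms/

def request_durations(log)
  log.each_line.filter_map { |line| line[COMPLETED, 1]&.to_f }
end

# Nearest-rank percentile: smallest value with at least p% of samples at or below it.
def percentile(values, p)
  sorted = values.sort
  rank = (p / 100.0 * sorted.size).ceil
  sorted[[rank, 1].max - 1]
end

durations = request_durations(sample_log)
puts "requests: #{durations.size}, p50: #{percentile(durations, 50)} ms, p95: #{percentile(durations, 95)} ms"
# requests: 4, p50: 60.0 ms, p95: 980.0 ms
```

Looking at percentiles rather than averages makes the single slow request visible; the mean of these four samples would hide it.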

Profiling tools

- StackProf or rbspy – allow you to profile Ruby code in a local or test environment. They let you see which methods consume the most CPU time. This is a great way to identify CPU-bound code fragments.

- Rack Mini Profiler – a gem that adds a preview of the execution time of various parts of a request to the page, such as database queries or view rendering. It is ideal for quick analysis during development.

GVL monitoring

Starting with Ruby 3.2, the GVL can be instrumented through the VM's internal thread event hooks. In practice, APM tools often do this for you, but if you want to dig deeper, you can use gems built on these hooks, such as gvltools, which report how much time threads spend waiting for the GVL.

Applying optimizations: How to proceed?

Once you know where the problem lies, you can apply the appropriate strategies.

For I/O-bound applications (limited by input/output operations)

- Threads – if your application spends a lot of time waiting (for example, for a database or external API), a multi-threaded server such as Puma is a good choice. Set the number of threads per process with RAILS_MAX_THREADS and the number of worker processes with WEB_CONCURRENCY. Strongly I/O-bound apps may benefit from somewhere between 5 and 16 threads per process, while CPU-heavier apps do better with fewer (newly generated Rails apps default to 3 since Rails 7.2).

- Asynchronous database queries – since Rails 7.0, load_async lets Active Record run a query on a background thread pool, so the request thread can keep working instead of blocking until the database responds.

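```ruby
# Example of an asynchronous query in Rails 7+.
# Requires an async executor, e.g. in config/application.rb:
#   config.active_record.async_query_executor = :global_thread_pool
# Without one, load_async simply runs the query in the foreground.
class PostsController < ApplicationController
  def index
    # Schedules the query on a background thread pool; the request thread
    # keeps going and only waits if @posts is used before the query finishes.
    @posts = Post.all.load_async

    # Rails 7.1+ adds async variants that return an ActiveRecord::Promise:
    # async_count, async_sum, async_minimum, async_maximum, async_average,
    # async_pluck, async_pick, async_ids, async_find_by_sql, async_count_by_sql.
    @posts_count = Post.async_count # call @posts_count.value in the view

    render :index
  end
end
```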

- HTTP Clients – use efficient HTTP libraries, such as HTTP.rb or Faraday with appropriate adapters. Also, remember to set timeouts and to retry transient failures.
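Timeouts and retries don't require a particular library. The sketch below uses Ruby's standard Net::HTTP, whose open and read timeouts default to 60 seconds, far too long for a web request. The with_retries and fetch_body helpers are hypothetical names, and the timeout values and backoff schedule are illustrative. Retrying is only safe for idempotent requests such as GET.

```ruby
require "net/http"
require "uri"

# Hypothetical helper: retry a block on transient errors with exponential backoff.
def with_retries(attempts: 3, base_delay: 0.1, on: [Net::OpenTimeout, Net::ReadTimeout])
  tries = 0
  begin
    tries += 1
    yield
  rescue *on
    raise if tries >= attempts # give up and re-raise the last error
    sleep(base_delay * (2**(tries - 1))) # 0.1 s, 0.2 s, 0.4 s, ...
    retry
  end
end

# Hypothetical helper: GET a URL with explicit, short timeouts.
def fetch_body(url)
  uri = URI(url)
  http = Net::HTTP.new(uri.host, uri.port)
  http.use_ssl = uri.scheme == "https"
  http.open_timeout = 2 # seconds to establish the connection
  http.read_timeout = 5 # seconds to wait for each read
  with_retries { http.get(uri.request_uri).body }
end
```

fetch_body needs network access, so it isn't called here; with_retries can be exercised with any block that raises one of the listed errors.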

- Caching – cache data aggressively, especially data that comes from external sources or rarely changes. Rails has a built-in caching API (Rails.cache) with stores backed by memory, files, Memcached, or Redis.

```ruby
# Example of caching in Rails
class Product < ApplicationRecord
  def self.expensive_calculation(product_id)
    # Returns the cached value if present; otherwise runs the block,
    # stores its result for one hour, and returns it.
    Rails.cache.fetch("product_#{product_id}_expensive_data", expires_in: 1.hour) do
      # Simulate a long-running calculation or external API call
      sleep(2)
      "Data for product #{product_id} calculated at #{Time.current}"
    end
  end
end
```

- Background Jobs – this is the most important strategy for I/O-bound operations! Move any work that the HTTP response doesn't need to wait for into background jobs. Sending emails, processing photos, communicating with payment systems: all of this should happen in the background. Use Sidekiq, Resque, or Delayed Job.

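```ruby
# Example of a background job using Active Job. With
# config.active_job.queue_adapter = :sidekiq, Sidekiq runs it.
class WelcomeEmailJob < ApplicationJob
  queue_as :default

  def perform(user_id)
    user = User.find(user_id)
    # Simulate sending an email
    puts "Sending welcome email to #{user.email}"
    sleep(5) # Runs in the job worker, so it doesn't block the web request
    puts "Welcome email sent!"
  end
end

# Enqueue from a controller; the request returns without waiting:
# WelcomeEmailJob.perform_later(user.id)
```

For CPU-bound applications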

- Processes instead of threads – if your APM shows that you spend most of your time in “Ruby code” (rather than in the database or I/O), you are probably CPU-bound. In this case, consider using the Unicorn server or a fork such as Pitchfork instead of Puma. Unicorn uses multiple processes, each with a single execution thread. This allows you to take full advantage of your CPU cores without competing for GVL.

- Code Profiling – use tools such as StackProf to find specific methods and loops that consume the most CPU time.

- Algorithm Optimization – once you find the “hot” spots in your code, focus on optimizing your algorithms. Sometimes rewriting a piece of code in C (as a C extension gem) or enabling YJIT, the JIT compiler shipped with Ruby since 3.1, can help, but start with optimization at the Ruby level.

- Offloading Computations – if possible, offload computationally intensive tasks to the database (for example, using SQL or materialized views) or to other, more efficient languages or services.
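As a small illustration of algorithm-level optimization, the sketch below uses Ruby's Benchmark module to time two ways of answering the same question: how many lookup IDs appear in a list of known IDs. The first uses Array#include?, a linear scan per lookup; the second builds a Set once and does hash lookups. The data and method names are made up; in a real app, a profiler such as StackProf would point you to the hot spot first.

```ruby
require "benchmark"
require "set"

# Naive version: Array#include? scans the array, O(n) per lookup.
def matches_with_array(known, lookups)
  lookups.count { |id| known.include?(id) }
end

# Optimized version: build a Set once, then O(1) average per lookup.
def matches_with_set(known, lookups)
  known_set = known.to_set
  lookups.count { |id| known_set.include?(id) }
end

known_ids = (1..5_000).to_a
lookups = (1..10_000).step(2).to_a # 5,000 odd IDs, half of them known

array_result = nil
set_result = nil
array_time = Benchmark.realtime { array_result = matches_with_array(known_ids, lookups) }
set_time = Benchmark.realtime { set_result = matches_with_set(known_ids, lookups) }

puts "Array#include?: #{array_result} matches in #{array_time.round(4)} s"
puts "Set#include?:   #{set_result} matches in #{set_time.round(4)} s"
```

Both versions return the same answer; only the cost per lookup changes, and the gap grows with the size of the known list.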

Context is key

The concept of “fast” is relative. Your optimization goals should always be based on business needs:

- Cost vs. Performance – sometimes it's cheaper to just buy a more powerful server (scale up) than to spend weeks on minor code optimizations. Remember, developer time costs money!

- User Experience – do users actually notice the slowdown? Does it affect conversion or their satisfaction?

- System-wide bottlenecks – optimizing one element (e.g., a Rails server) may not make sense if the real problem is the database, network, or external API. Always look at the system as a whole.

Continuous monitoring and iteration

Optimization is not a one-time activity, but rather a continuous process:

- Set alerts – configure alerts in your APM so you are notified when latency rises above, or throughput falls below, the thresholds you set.

- Regular reviews – periodically analyze performance reports. This will help you find new issues that arise as your application grows and traffic increases.

- Load testing – before you make major changes or expect increased traffic, perform load testing (for example, using tools such as JMeter, Locust, or k6). This will allow you to check how the system performs under load.

Summary

Optimizing a Rails server is a complex process that requires a deep understanding of both the application architecture and the basic mechanisms of the Ruby language. It's not just about being “fast”, but above all about being “fast enough” for specific business needs. You need to accurately measure and understand both latency and throughput. Eliminating bottlenecks, choosing the right server configuration, and strategically using background tasks are the foundations of an effective, scalable Rails application.

Happy optimizing!