Snapshot vs Event Sourcing: Why does your system lie about the past?

Imagine you are conducting an investigation. You enter a room where there is an overturned chair and a broken glass. This is the current state. But do you know who knocked the chair over? Did the glass fall by itself, or did someone throw it? Or maybe the chair has been lying there for a week? When you look at a typical database in the architecture we all know and (sometimes) love, all you see is this mess. You don't see the surveillance video. You see a snapshot. And this is where we need to talk about why your UPDATE is destroying your company's business value.

The problem with the snapshot model

Most of us grew up on the CRUD model: Create, Read, Update, Delete. It's the bread and butter of every backend developer. We design tables, normalize data, and rejoice that we have a “single source of truth.” But is that really the case? Let's look at a simple logistics scenario of the kind that is easy to overlook when racing against deadlines.

Imagine a package in a warehouse. In the snapshot (relational) model, we have a shipments table with a status column. The package enters the warehouse, and the status changes to RECEIVED. Then it is packed and ready to go, so we run an UPDATE that sets it to READY_TO_SHIP. The courier arrives, but it turns out that the package does not fit in the van. The courier leaves, and the warehouse worker scans the package again as received. The status returns to RECEIVED.

For the database, everything is fine. The record exists, and the status is correct. But from a business perspective, we have just lost critical information. The system tells us that the package is simply “in” the warehouse. It does not tell us that there was already an attempt to ship it, that we wasted the warehouse worker's time, and that we should probably charge the customer twice for logistics services. UPDATE is a destructive operation. It overwrites history and leaves us with amnesia. I know what you're thinking: “I can add a history table.” We'll talk later about why this is a prosthesis and not a solution.
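To make the contrast concrete, here is a minimal pure-Ruby sketch of the scenario above. It is only an illustration: a Hash stands in for the row in the shipments table, an Array stands in for an event log, and the event names are hypothetical.

```ruby
# Snapshot model: one mutable row, overwritten on every change.
row = { shipment_id: "123-abc", status: "RECEIVED" }
row[:status] = "READY_TO_SHIP" # packed
row[:status] = "RECEIVED"      # did not fit in the van, scanned again

# Event model: every change is appended, nothing is overwritten.
log = []
log << { type: "ShipmentReceived",    shipment_id: "123-abc" }
log << { type: "ShipmentReadyToShip", shipment_id: "123-abc" }
log << { type: "DeliveryFailed",      shipment_id: "123-abc", reason: "Package too big" }
log << { type: "ShipmentReceived",    shipment_id: "123-abc" }

puts row[:status]                                   # RECEIVED (the failed attempt is gone)
puts log.count { |e| e[:type] == "DeliveryFailed" } # 1 (the failed attempt is still there)
```

Both models end with the package in the warehouse, but only the log can still answer “was there a failed shipping attempt?”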

What is event sourcing anyway?

Event Sourcing is a paradigm shift that requires us to unlearn thinking in terms of tables. In this approach, we do not store the current state of an object as the primary source of data. Instead, we store the sequence of events that led to that state. The state is not something stored on disk; it is the result of a function applied to those events. If you remember the reduce (or left fold) operation from your studies or from functional programming, you already know the heart of Event Sourcing.

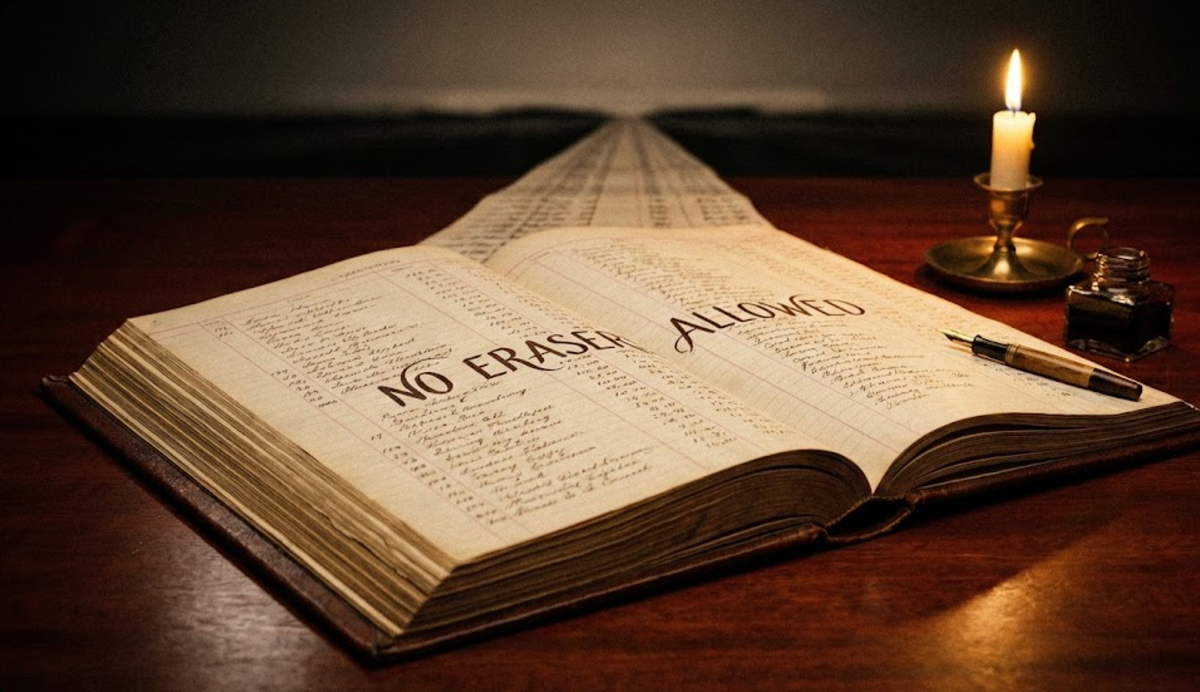
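The fold can be written down directly. The sketch below assumes events are plain Hashes and uses a hypothetical `evolve` function. It is not tied to any library, and `Enumerable#reduce` does the replaying.

```ruby
# Hypothetical events as plain Hashes; a real system would use event objects.
events = [
  { type: :shipment_received },
  { type: :shipment_ready_to_ship },
  { type: :delivery_failed, reason: "Package too big" }
]

# evolve(state, event) -> new state. Pure: no I/O, and the old state is never mutated.
def evolve(state, event)
  case event[:type]
  when :shipment_received      then state.merge(status: :received)
  when :shipment_ready_to_ship then state.merge(status: :ready_to_ship)
  when :delivery_failed
    state.merge(status: :received, failed_attempts: state[:failed_attempts] + 1)
  else
    state # unknown events leave the state unchanged
  end
end

initial_state = { status: :unknown, failed_attempts: 0 }.freeze

# Current state is a left fold of evolve over the stream.
current_state = events.reduce(initial_state) { |state, event| evolve(state, event) }

puts current_state[:status]          # received
puts current_state[:failed_attempts] # 1
```

Because `evolve` never mutates its input, the same stream always folds to the same state, which is what makes replaying safe.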

In this model, our database operates in Append-Only mode. There is no room for UPDATE or DELETE in the traditional sense. Events are immutable. Once the fact that “The Package Has Been Accepted” is recorded, it remains true forever. If we make a mistake, we emit a corrective event, rather than erasing the past with correction fluid.
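Here is a minimal sketch of what append-only means in practice. It assumes a hypothetical in-memory `EventStream` class (a real event store persists events, but the contract is similar). The only write operation is `append`, stored events are frozen, and a mistake is fixed by appending a hypothetical `weight_corrected` event instead of editing the original one.

```ruby
# Hypothetical append-only stream: events can be added and read, never changed.
class EventStream
  def initialize
    @events = []
  end

  # The only write operation. Each event (and its data) is frozen on the way in.
  def append(type, data = {})
    @events << { type: type, data: data.dup.freeze }.freeze
    self
  end

  # Readers get a frozen copy, so nobody can edit history through it.
  def events
    @events.dup.freeze
  end
end

stream = EventStream.new
stream.append(:shipment_received, { weight: 15.5 })
# The scale was miscalibrated. Instead of editing the first event, record a correction.
stream.append(:weight_corrected, { weight: 17.0, reason: "Scale miscalibrated" })

# The latest weight wins, but the original reading is still in the stream.
current_weight = stream.events.reduce(nil) { |weight, event| event[:data].fetch(:weight, weight) }

puts current_weight                      # 17.0
puts stream.events.first[:data][:weight] # 15.5
```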

Anatomy of an event in code

Instead of dry definitions, let's see what an event might look like in Ruby. With a popular library such as Rails Event Store, we define an event as a simple data structure carrying business intent.

```ruby
require 'rails_event_store'

class ShipmentReceived < RailsEventStore::Event
  # This event represents a fact that happened in the past.
  # It carries business data, not just table columns.
end

# Usage example:
event = ShipmentReceived.new(
  data: {
    shipment_id: "123-abc",
    warehouse_id: "WAW-01",
    weight: 15.5
  },
  metadata: {
    # In practice the event store fills in some of this for you (the timestamp,
    # for example, is added when the event is published). Set by hand here for illustration.
    timestamp: Time.now.utc,
    correlation_id: "req-789", # tracks the whole request flow
    causation_id: "cmd-456",   # the command that triggered this event
    user_id: 34
  }
)
```

Note that in addition to the payload itself (data), we have rich context in metadata. Thanks to correlation_id and causation_id, we can trace exactly why a given change occurred, who caused it, and in which business process it took place. In a typical CRUD model, this information usually ends up scattered across application logs, if it is collected at all.
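How do correlation_id and causation_id get their values for follow-up events? A common convention is that a reaction keeps the correlation_id of the event it reacts to and uses that event's own id as its causation_id. The sketch below shows that convention in plain Ruby. The `Event` struct, the `caused_by` helper and the `ExtraHandlingFeeCharged` event are hypothetical and are not the Rails Event Store API (the gem provides its own way to do this; check its documentation).

```ruby
require "securerandom"

# Hypothetical plain-Ruby event; a stand-in for a library event class.
Event = Struct.new(:type, :event_id, :data, :metadata, keyword_init: true)

# Hypothetical helper: build an event caused by `parent`.
# It inherits the correlation_id and points its causation_id at the parent.
def caused_by(parent, type:, data: {})
  Event.new(
    type: type,
    event_id: SecureRandom.uuid,
    data: data,
    metadata: {
      correlation_id: parent.metadata[:correlation_id],
      causation_id: parent.event_id
    }
  )
end

failed = Event.new(
  type: "DeliveryFailed",
  event_id: SecureRandom.uuid,
  data: { shipment_id: "123-abc", reason: "Package too big" },
  metadata: { correlation_id: "req-789", causation_id: "cmd-456" }
)

# A follow-up reaction, e.g. billing the second handling attempt.
fee = caused_by(failed, type: "ExtraHandlingFeeCharged", data: { shipment_id: "123-abc" })

puts fee.metadata[:correlation_id]                  # req-789
puts fee.metadata[:causation_id] == failed.event_id # true
```

Following causation_id backwards reconstructs the chain of reasons; grouping by correlation_id gives you everything a single business process produced.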

State recreation, or time travel

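Now that we have a stream of events, how do we obtain the current state? We replay the events. The object that rebuilds the state of a single package from its own stream is usually called an aggregate; that stream is short, so replaying it is cheap. For screens such as a list of all packages, we don't want to read a million events on every request (although technically it is possible), so we also maintain projections (read models) that are updated as new events arrive.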

Simply put, we take an empty object and “apply” subsequent events from the stream to it. Here's how it might look in pure Ruby:

```ruby
require 'rails_event_store'

# ShipmentReceived as in the previous example, plus the two other events used below.
class ShipmentReceived < RailsEventStore::Event; end
class ShipmentReadyToShip < RailsEventStore::Event; end
class DeliveryFailed < RailsEventStore::Event; end

class Shipment
  attr_reader :status, :history_log

  def initialize
    @status = :unknown
    @history_log = []
  end

  # We replay history to rebuild the state
  def apply(events)
    events.each do |event|
      case event
      when ShipmentReceived
        @status = :received
        @history_log << "Received at #{event.metadata[:timestamp]}"
      when ShipmentReadyToShip
        @status = :ready_to_ship
      when DeliveryFailed
        # Here is the business value!
        # We know it failed, even if the next state is 'received' again.
        @history_log << "Delivery failed: #{event.data[:reason]}"
        @status = :received
      end
    end
    self
  end
end

# Reconstructing state from the stream (in a real app, these would be read from the store)
events_from_db = [
  ShipmentReceived.new(data: { shipment_id: "123-abc" }, metadata: { timestamp: Time.now.utc }),
  ShipmentReadyToShip.new(data: { shipment_id: "123-abc" }),
  DeliveryFailed.new(data: { shipment_id: "123-abc", reason: "Package too big" })
]

shipment = Shipment.new.apply(events_from_db)

puts shipment.status
# Output: received (matches the current state)

puts shipment.history_log
# Output includes the failure! We didn't lose that info.
```

This approach gives us something invaluable: the ability to travel back in time. We can restore the system to any point in the past by replaying only the events up to that moment. Debugging also becomes much easier, because you don't have to guess how the user drove the system into a broken state: you have the exact sequence of their actions saved.
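Time travel is the same fold with a cut-off. The sketch below assumes each event carries a timestamp and that the stream is already ordered by time, as a stream read from the store would be. The `Event` struct, the example timestamps and `status_as_of` are hypothetical.

```ruby
# Hypothetical events carrying the timestamp an event store would record.
Event = Struct.new(:type, :timestamp, keyword_init: true)

# Example stream, ordered by time.
STREAM = [
  Event.new(type: :shipment_received,      timestamp: Time.utc(2024, 5, 6, 8, 0)),
  Event.new(type: :shipment_ready_to_ship, timestamp: Time.utc(2024, 5, 6, 12, 30)),
  Event.new(type: :delivery_failed,        timestamp: Time.utc(2024, 5, 6, 15, 10))
].freeze

STATUS_AFTER = {
  shipment_received: :received,
  shipment_ready_to_ship: :ready_to_ship,
  delivery_failed: :received
}.freeze

# Time travel: replay only the events recorded up to `as_of` (inclusive).
def status_as_of(stream, as_of)
  stream
    .take_while { |event| event.timestamp <= as_of }
    .reduce(:unknown) { |_status, event| STATUS_AFTER.fetch(event.type) }
end

puts status_as_of(STREAM, Time.utc(2024, 5, 6, 13, 0)) # ready_to_ship
puts status_as_of(STREAM, Time.utc(2024, 5, 6, 16, 0)) # received
```

`take_while` relies on the ordering assumption; with an unordered source you would use `select` instead.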

Why are audit tables a dead end?

A common counterargument is: “I can use a gem like paper_trail or an audit table.” Yes, you can. But audit tables have two major drawbacks. First, they are technical, not business-oriented. They typically store pairs of old_value and new_value. When the business asks, “Why did our best customer cancel?”, the audit table will tell you, “The status field changed from 5 to 9.” Event Sourcing will tell you, “The event PremiumCustomerSupportCallFailed occurred, followed by SubscriptionCancelled.”
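The difference shows up as soon as you try to answer the business question. In this sketch (hypothetical data and a hypothetical `events_before` helper), the audit row can only report a column diff, while the stream can be asked what happened right before the cancellation.

```ruby
# What an audit table typically keeps: a technical diff of a single column.
audit_row = { table: "subscriptions", column: "status", old_value: 5, new_value: 9 }

# What an event stream keeps: named business facts, in order.
customer_stream = [
  { type: "SubscriptionRenewed" },
  { type: "PremiumCustomerSupportCallFailed" },
  { type: "SubscriptionCancelled" }
]

# Hypothetical query: which events happened just before the first event of `type`?
def events_before(stream, type, window: 2)
  index = stream.index { |event| event[:type] == type }
  return [] if index.nil?

  stream[[index - window, 0].max...index]
end

puts "#{audit_row[:column]}: #{audit_row[:old_value]} -> #{audit_row[:new_value]}" # status: 5 -> 9
puts events_before(customer_stream, "SubscriptionCancelled", window: 1).map { |e| e[:type] }
# PremiumCustomerSupportCallFailed
```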

Second, audit and history tables are often “beside” the main application. It's easy to forget to update them, especially with manual interventions in the database. In Event Sourcing, an event is the only way to change the state. If there is no event, the change does not exist. Auditability comes free of charge, built into the system architecture, rather than stuck on with adhesive tape.
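One common way to enforce “no event, no change” is to let commands validate the business rule and then record an event, with a single private method being the only code that touches the state. The sketch below assumes hypothetical `Struct` events and a simplified `Shipment` that starts in the `:received` state; persisting `unpublished_events` to a store is left out.

```ruby
# Hypothetical event types as simple value objects.
ShipmentReadyToShip = Struct.new(:shipment_id)
DeliveryFailed      = Struct.new(:shipment_id, :reason)
InvalidTransition   = Class.new(StandardError)

class Shipment
  attr_reader :status, :unpublished_events

  # Simplification: the shipment starts already received.
  def initialize(id)
    @id = id
    @status = :received
    @unpublished_events = []
  end

  # Commands check the business rule, then record an event.
  # They never assign @status themselves.
  def mark_ready_to_ship
    raise InvalidTransition, "not in the warehouse" unless @status == :received

    record(ShipmentReadyToShip.new(@id))
  end

  def fail_delivery(reason)
    raise InvalidTransition, "not ready to ship" unless @status == :ready_to_ship

    record(DeliveryFailed.new(@id, reason))
  end

  private

  # The only path to a state change: apply the event and keep it for storage.
  def record(event)
    apply(event)
    @unpublished_events << event # to be appended to the store by the caller
    self
  end

  def apply(event)
    case event
    when ShipmentReadyToShip then @status = :ready_to_ship
    when DeliveryFailed      then @status = :received
    end
  end
end

shipment = Shipment.new("123-abc")
shipment.mark_ready_to_ship.fail_delivery("Package too big")
p shipment.status                          # :received
p shipment.unpublished_events.map(&:class) # [ShipmentReadyToShip, DeliveryFailed]
```

Since the same `apply` is used when replaying stored events, the live object and the rebuilt one cannot drift apart.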

Fear of eventual consistency

Many engineers fear Event Sourcing because of the mythical “eventual consistency.” We are afraid that the user will save something and not see it immediately on the screen. This is a legitimate concern, but in reality it is often exaggerated.

Most implementations, such as the aforementioned Rails Event Store or Marten in the .NET world, allow synchronous updates of so-called Read Models. You can save an event and update the SQL table used to display data in the same database transaction. Eventual consistency is a powerful tool that allows you to scale your system, but it is not a requirement that you must adopt from day one. You can start with synchronous processing, taking advantage of the rich event history, and switch to asynchronous processing only where performance requires it.
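Here is a sketch of the synchronous variant, assuming a hypothetical `InMemoryStore` in place of a database. `publish` appends the event and runs the read-model handlers in the same call; if any handler fails, both writes are rolled back, which is roughly what wrapping both in one database transaction gives you. It is not the API of Rails Event Store or Marten.

```ruby
# Hypothetical in-memory stand-in for an event table plus one read-model table.
class InMemoryStore
  attr_reader :events, :read_model

  def initialize
    @events = []
    @read_model = {} # shipment_id => status: what the front end would query
    @handlers = []
  end

  def subscribe(&handler)
    @handlers << handler
  end

  # Append the event and run every handler synchronously, all or nothing.
  def publish(event)
    events_snapshot = @events.dup
    model_snapshot = @read_model.dup
    begin
      @events << event
      @handlers.each { |handler| handler.call(event, @read_model) }
    rescue StandardError
      @events = events_snapshot
      @read_model = model_snapshot
      raise
    end
  end
end

STATUS_AFTER = {
  "ShipmentReceived" => :received,
  "ShipmentReadyToShip" => :ready_to_ship,
  "DeliveryFailed" => :received
}.freeze

store = InMemoryStore.new
store.subscribe { |event, model| model[event[:shipment_id]] = STATUS_AFTER.fetch(event[:type]) }

store.publish({ type: "ShipmentReceived", shipment_id: "123-abc" })
store.publish({ type: "ShipmentReadyToShip", shipment_id: "123-abc" })
puts store.read_model["123-abc"] # ready_to_ship, visible right after publish

begin
  store.publish({ type: "UnknownEvent", shipment_id: "123-abc" })
rescue KeyError
  # The read model could not be updated, so the event was rolled back too.
end
puts store.events.size # 2
```

Because the read model is updated before `publish` returns, the user sees their change on the next screen. Moving a handler to a background queue later is what introduces eventual consistency.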

Summary

Event Sourcing is not a silver bullet for every problem. It requires learning, a change in mindset, and a more careful approach to data migration (since we cannot delete data, we must correct it with new events). However, in systems where history, business intent, and behavioral analytics are key, the snapshot model simply does not work. Stop letting your system lie about the past. Start treating data as history, not as a draft that you keep rubbing out.

Happy appending!